This post follows on from our AWS Nova log benchmarking article, where we explored how smaller LLMs like Nova Micro perform on log analysis tasks compared to larger models like GPT-3.

That earlier post also highlighted that LLMs are surprisingly good at parsing logs. While that work focused on understanding logs, this post tackles an earlier step: automatically structuring logs using AI.

Logging Origins

Logs are one of the oldest - and still most valuable - forms of observability. Mainframes and early Unix systems were already using logs to record system activity, with tools like syslog dating back to the early 1980s.

Even as systems have become more distributed and complex, logs remain foundational, especially for trying to investigate issues when things go wrong! Logs are typically written to local files for simplicity and reliability before being shipped to modern observability platforms such as Bronto using agents like OpenTelemetry or Fluent Bit - as outlined in our setup guide.

Why so many formats?

The OpenTelemetry (OTel) project is encouraging the adoption of structured JSON logs - and that’s a good thing. Structured logs are easier to search, are more human readable, safer to manipulate, and more cloud-native.

But the reality is not that simple, as there are still many systems that generate unstructured or semi-structured logs where key=value pairs are embedded inside free-text messages. And even among structured logs formats can vary wildly. Timestamps alone appear in many different formats.

Logs reflect the unique fingerprint of each tech stack:

- syslog is still widely used - with quirks in its timestamp formatting.

- Apache uses the Common Log Format.

- nginx has its own custom variant.

- Java apps use logback, log4j, or slf4j.

- AWS services often emit structured JSON or a hybrid of text and JSON.

With no single standard, Bronto set out to solve the problem in an innovative way - using AI to generate parsers automatically, reducing the toil and complexity that users typically face.

Automated Log Parsing

Parsing logs in real time is a performance critical operation. When you’re ingesting millions of events per second every millisecond counts. Regex-based parsing can be complex and difficult to maintain and require expertise in tools like Grok or Dissect and such parsing may become a bottleneck at scale, especially when applied indiscriminately.

At Bronto, we use a multi-layered approach to log parsing which separates offline detection from online parsing. Online parsing happens in real time as part of the ingestion pipeline, while offline detection occurs outside of the ingestion pipeline and involves a short delay. This hybrid approach ensures speed without sacrificing flexibility, while reducing user toil, something many platforms struggle to balance.

Curated Java Parsers

We maintain a library of high-performance Java-based parsers, optimized for the most common formats seen in high volumes across multiple customers. These are purpose-built for speed and reliability and are designed to fail fast if they encounter a log that doesn’t match their expected format.

If a log event matches one of these Java parsers, we apply it automatically and extract key fields.

After parsing, we apply additional lightweight processors to normalise important items in order to make it easier to view logs and build queries for those logs:

- Timestamp parser - auto-detects and normalizes varied timestamp formats.

- Log level parser - maps diverse severity keywords into five standard levels.

- KVP parser - extracts key=value pairs from the message or body, even if they’re only present in some events.

Dissect and Grok Fallback

For less common but still important formats, we fall back to Dissect or Grok:

- Dissect is fast and great for structured, delimiter-based logs.

- Grok is more flexible, supporting regex-based parsing, but comes at a performance cost.

Bronto maintains a large database of both dissect and grok patterns. However, due to their runtime cost, we don’t attempt to apply every pattern to every event online.

Instead, we:

- Sample log events offline.

- Match them against our full pattern library.

- If a match is found, we automatically assign a parser hint to the dataset.

- Future log events in that dataset are parsed using the matched pattern.

- We gather metrics of how well the logs are parsed for each dataset and periodically revalidate hints to ensure accuracy.

AI-Generated Parsing

When we encounter unknown or proprietary formats, other tools might require users to handcraft regexes or pattern rules through a UI.

At Bronto, we take a different approach: we let AI do the work.

.png)

When enabled, we send a sample of the dataset to an internal AI engine that analyzes the log structure and generates a custom dissect pattern. We then test the pattern against a wider sample of logs. If the suggested pattern matches a high percentage of events, we present the pattern and sample results to the user. They can tweak field names if desired - and once approved, the parser is saved and applied automatically to all future events in that dataset.

This AI-assisted approach is faster, more scalable, and dramatically reduces the manual effort required to onboard new log formats.

Example

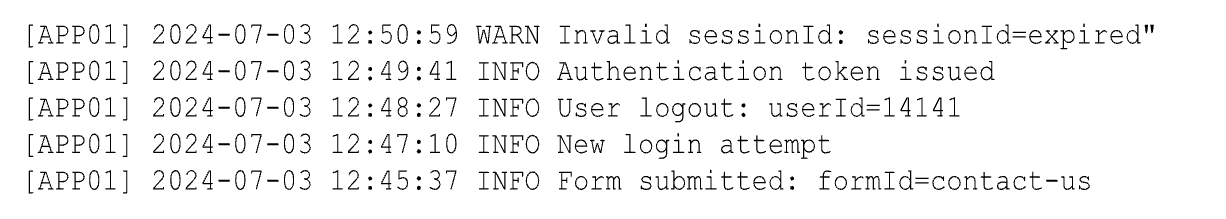

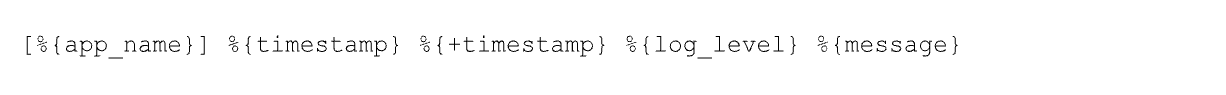

Suppose your application logs look like this:

We analyse hundreds of lines of logs and generate the following pattern:

The parsed log will log like this:

Then, our KVP parser can further extract fields like sessionId, userId and formId from the message value.

.png)

We use AWS Bedrock as a managed service to access LLMs, e.g. Claude. Our infrastructure chooses the most appropriate model to use for a given application and sends a structured prompt or prompts to the LLM, e.g. our AI log parser builds a prompt including instructing the LLM which patterns to avoid and how to handle keys such as timestamps. So the user does not have to worry about models or prompts - they just use our application.

Bedrock also provides a number of other benefits for use in a SAAS environment:

- built-in safeguards to detect and filter harmful content

- it never stores or uses our data to train models

- all data remains within the AWS network

- it works seamlessly with services like Lambda and S3 allowing us to incorporate AI into our platform without having to rearchitect systems.

Looking Ahead

At Bronto we believe parsing should be fast, accurate and hands free. Today we generate dissect patterns using AI. Soon we’ll be generating Grok patterns too - bringing AI to even more complex and less structured formats.

As OTel continues to push for JSON-based structured logging, the hope is that log parsing becomes a less painful problem. But until then, automated, adaptive parsing isn’t just a convenience – it’s a necessity.

Summary

Bronto combines curated Java parsers, flexible Dissect/Grok matching, and AI-powered pattern generation into a unified pipeline for parsing any log format, structured or otherwise.

If your logs are weird or messy – we’ve got you.

.png)

.png)

.png)