
At Bronto we have been working on different ways to integrate AI into our product. Given the rate of progress with LLMs over the past few years, it’s becoming easier to leverage these technologies to improve existing features and products. For product builders, the challenge is finding the right application of these technologies so that it fits your product and, more importantly, its users.

While it’s a good thing that the integration of AI assistants is so trivial these days, it’s important to do so in a way that is actually useful.

At Bronto our goal is to integrate AI in a way that helps users by removing toil from their existing workflows and ultimately saving them time.

The problem with dashboards

Any log management tool needs to have capable dashboarding functionality so that users can quickly gain insights based on their log data. At a quick glance you should be able to tell the health of your services, any errors that have occurred, anomalies in your log data and more.

In order to create effective dashboards someone has to set them up manually by creating each widget, specifying the datasets that the widget should use and any filters or aggregations that should be applied, as well as the visualization best suited to displaying the data. Not everyone on a team will have the context to do this, but that shouldn’t stop them from being able to get up and running with a useful dashboard.

Bronto’s approach: AI-assisted widget creation

That’s why we’ve introduced an AI widget creator in Bronto, as shown below. You can simply ask Bronto to create the widget for you and tell it exactly what you want in plain English. The dataset selection and any filters or aggregations are applied automatically.

For example, if you have no context on the source of certain log data but you know there was an error in the last hour, you could ask Bronto to “show me the errors in my logs in the last hour”.

After submitting the prompt, the user instantly sees data on what is happening behind the scenes.

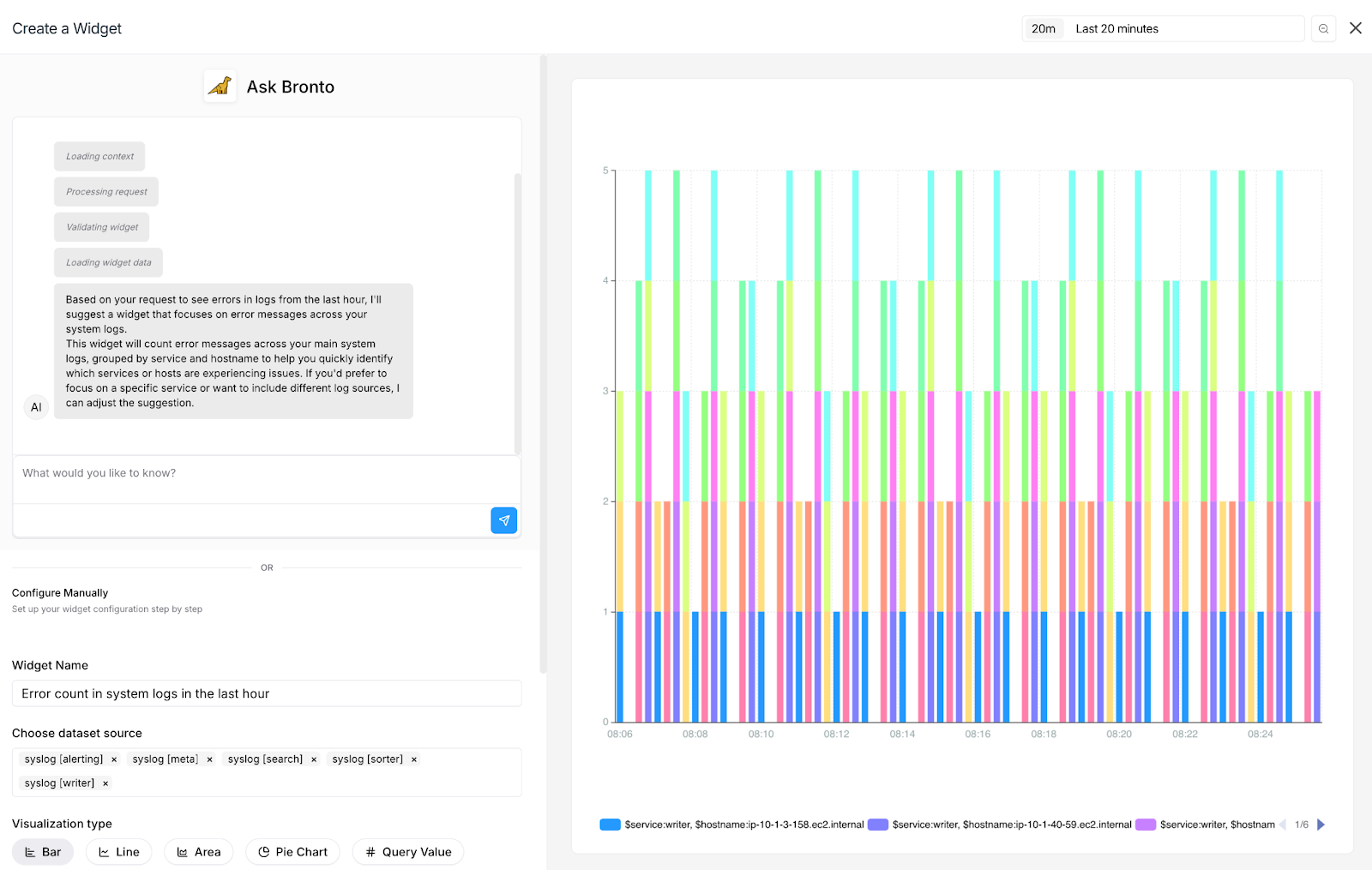

Within a few seconds we can see that the ai-assistant service loaded context, processed the user prompt, validated that the widget it’s about to create is what the user wanted, then replied with an explanation of the widget it has just created.

Design process iterations

Our goal for the widget creator was to build something that solved the problem of users not having the context needed to create a useful dashboard. We also wanted to significantly reduce the time taken to do it even if you have the context.

Initial proof of concept

The first iteration of the feature was a standalone ChatGPT-like interface which lived on its own page. The user would initiate a conversation with the AI and describe what dashboard widget they wanted, and after some back and forth would get a link to go to the dashboard and view the widget.

This didn’t hit the mark though. The feedback loop was too long, and the user still had to verify the result manually in a UI separate from the conversation.

Pivoting to something simpler

Ultimately we found that the most seamless experience was to integrate our AI assistant into the Create Widget flow and make it an optional enhancement for users who don’t have the context to create widgets manually.

Creating a widget requires the following:

- One or more datasets – this is the source of the log data to be visualized.

- A visualization type – supported types include Area, Bar, Line, Pie, Tree Map, Top List and more.

- A statistical aggregation – this is the metric definition: a function (e.g. Count, Average, Sum or Unique) and the data point to measure, which is usually a key in the log data, for example “duration_millis”.

- A name for the widget.

Optionally, a query filter can be applied against the log data as well as a grouping function.
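To make the list above concrete, here is a minimal sketch of how a widget definition could be modelled and checked before saving. The class name, field names, the dataset name and the rule that only Count can omit a key are all assumptions made for illustration; this is not Bronto’s actual schema.

```python
from dataclasses import dataclass
from typing import Optional

# Partial lists taken from the article; the real product supports more types.
VISUALIZATIONS = {"Area", "Bar", "Line", "Pie", "Tree Map", "Top List"}
FUNCTIONS = {"Count", "Average", "Sum", "Unique"}


@dataclass
class WidgetDefinition:
    """Hypothetical shape of a widget; field names are illustrative only."""
    name: str
    datasets: list[str]
    visualization: str
    function: str
    key: Optional[str] = None           # data point to measure, e.g. "duration_millis"
    query_filter: Optional[str] = None  # optional filter on the log data
    group_by: Optional[str] = None      # optional grouping

    def validate(self) -> list[str]:
        """Return a list of human-readable problems (empty if the widget is valid)."""
        problems = []
        if not self.name.strip():
            problems.append("a name is required")
        if not self.datasets:
            problems.append("at least one dataset is required")
        if self.visualization not in VISUALIZATIONS:
            problems.append(f"unknown visualization: {self.visualization}")
        if self.function not in FUNCTIONS:
            problems.append(f"unknown function: {self.function}")
        elif self.function != "Count" and not self.key:
            # Assumption: every function except Count needs a key to measure.
            problems.append(f"{self.function} needs a key to measure")
        return problems


widget = WidgetDefinition(
    name="Errors in the last hour",
    datasets=["app-logs"],  # hypothetical dataset name
    visualization="Bar",
    function="Count",
)
print(widget.validate())
```

A structure like this is also a natural target for the AI to fill in: the assistant proposes values for each field, and the same validation runs whether the values came from the form or from the model.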

So overall, the process of creating effective widgets can be quite involved. Even small things like choosing a sensible name and following consistent naming patterns are toil that Bronto can eliminate, and does. (See our blog post here - https://www.bronto.io/blog/naming-is-hard-except-at-bronto - for more reading on how we tackle the naming problem.)

Having both the original form and the new AI prompt in the same UI lets the user start with a prompt describing what they want. The AI then fills in the form fields below and the preview updates automatically. If the result is what the user expected, they simply save the form and the widget is created. If it’s not quite what they wanted, they can either tweak things manually or continue to chat with the Bronto assistant to give it more context.

This feedback loop is much tighter because the user instantly sees the results from the AI, and it’s easy for them to follow up with more prompts if the original result isn’t quite right.

Under the hood: Technical details

The user experience for the AI assisted widget creation is a pattern that could be used in other parts of the product, and ultimately we want the technical decisions we make for building these features to scale for future work too.

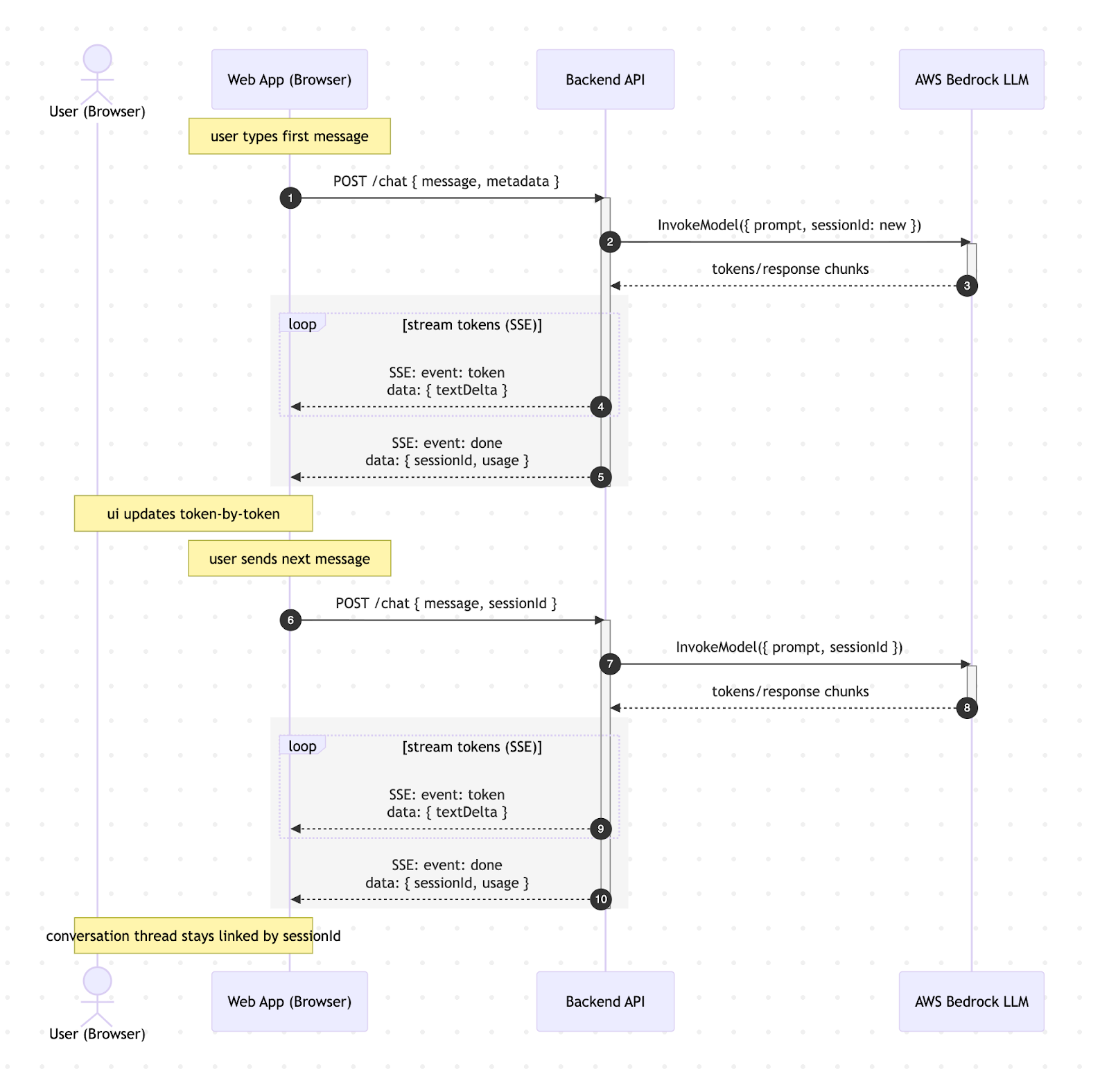

The core piece of the user experience is seeing updates in real time. As soon as a user sends their prompt, they receive feedback about what the AI is currently doing. Messages stream from the backend to the frontend, allowing the user to see the step the AI is currently executing.

Backend orchestration

Our infrastructure at Bronto is built on AWS, and we use the Bedrock service to access LLMs from our backend. An ai-assistant service powers the widget creation requests: each time a user asks for a widget to be created, it invokes an LLM on Bedrock, passing along context about the user’s request, the data within that user’s org and general system prompts that guide the LLM.

Frontend integration

The Bronto frontend streams the response from the ai-assistant service using Server-Sent Events (SSE). We settled on SSE since the primary use case is to stream real-time updates to the client. We could have opted for WebSockets, but there is extra overhead in managing such connections, and our use case only requires minimal communication from the client back to the server during the widget creation process. To handle that client-to-server communication, every message the user sends is a POST request to our ai-assistant service. The first response contains a sessionId, which can be included in future requests to ensure the AI knows about the previous messages.
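The sessionId handshake described above can be sketched with a small in-memory store. This is only an illustration of the pattern: the class, the method name and the choice to start a fresh session when an unknown id is supplied are assumptions, and a real service would persist sessions and stream the reply rather than return it directly.

```python
import uuid
from typing import Optional


class SessionStore:
    """Hypothetical in-memory store mapping a sessionId to its prompt history."""

    def __init__(self) -> None:
        self._sessions: dict[str, list[str]] = {}

    def handle_message(self, prompt: str, session_id: Optional[str] = None) -> dict:
        """Simulate one POST: create a session if needed, then record the prompt."""
        if session_id is None or session_id not in self._sessions:
            # First message (or unknown id, by assumption): start a new session.
            session_id = str(uuid.uuid4())
            self._sessions[session_id] = []
        history = self._sessions[session_id]
        history.append(prompt)
        # The response echoes the sessionId so the client can send it next time.
        return {"sessionId": session_id, "history": list(history)}


store = SessionStore()
first = store.handle_message("show me the errors in my logs in the last hour")
follow_up = store.handle_message("group them by service", session_id=first["sessionId"])
print(len(follow_up["history"]))
```

Because each message is an ordinary POST, the client never needs a long-lived bidirectional connection; the sessionId is the only state it has to carry between requests.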

The Event Stream received by the client contains a consistent structure of message types that can be used across all AI integrated features, such as LOADING_MESSAGE, AI_RESPONSE_DELTA, QUERY_RESPONSE, RESPONSE_COMPLETED, and ERROR.
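As a rough sketch of how a client might consume such a stream, the parser below reads the standard `event:` and `data:` fields of the SSE wire format and assembles the AI reply from the deltas. The message type names come from the list above; carrying the type in the `event:` field, JSON payloads, and the `text` and `message` payload keys are assumptions, since the exact wire format is not described here.

```python
import json
from typing import Iterable, Iterator

MESSAGE_TYPES = {
    "LOADING_MESSAGE", "AI_RESPONSE_DELTA", "QUERY_RESPONSE",
    "RESPONSE_COMPLETED", "ERROR",
}


def parse_sse(lines: Iterable[str]) -> Iterator[tuple[str, dict]]:
    """Yield (event_type, payload) pairs; a blank line ends each event."""
    event_type, data_lines = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data_lines:
                yield event_type, json.loads("\n".join(data_lines))
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # SSE comment line, often used as a keep-alive
        elif line.startswith("event:"):
            event_type = line[len("event:"):].strip()
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # Per the SSE format, a single leading space after the colon is dropped.
            data_lines.append(value[1:] if value.startswith(" ") else value)


def collect_reply(lines: Iterable[str]) -> str:
    """Concatenate AI_RESPONSE_DELTA chunks until RESPONSE_COMPLETED arrives."""
    parts = []
    for event_type, payload in parse_sse(lines):
        if event_type == "AI_RESPONSE_DELTA":
            parts.append(payload.get("text", ""))  # "text" key is hypothetical
        elif event_type == "ERROR":
            raise RuntimeError(payload.get("message", "unknown error"))
        elif event_type == "RESPONSE_COMPLETED":
            break
    return "".join(parts)


SAMPLE_STREAM = [
    "event: LOADING_MESSAGE", 'data: {"text": "Loading context"}', "",
    "event: AI_RESPONSE_DELTA", 'data: {"text": "Created "}', "",
    "event: AI_RESPONSE_DELTA", 'data: {"text": "an error count widget."}', "",
    "event: RESPONSE_COMPLETED", "data: {}", "",
]
print(collect_reply(SAMPLE_STREAM))
```

Keeping the set of message types small and fixed means the same parsing loop can be reused by any feature that streams from the assistant; only the handling of each type changes.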

Building Context

As mentioned above, the backend service that invokes the Bedrock LLMs passes along context that guides the LLM towards the output we want.

This context includes a full list of datasets within the user’s organization. Each dataset is tagged with metadata by our ingestion service, for example description tags with information on the type of data in the logs, the log format, and parsed key-value pairs.

Visualizing log data in Bronto dashboards can leverage our SQL-based query language. The ai-assistant service also sends documentation on our query language syntax, as well as example queries for common widgets.

Finally, we have a system prompt. This is a carefully curated prompt that is hidden from the user and included in each request to the LLM. It contains the instructions that guide the LLM and tell it how to interpret and respond to user prompts. We continually tweak the system prompt based on real feedback, which allows users to send vague prompts but still get useful results.
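The three ingredients described in this section (dataset metadata, query language documentation with examples, and the system prompt) could be assembled along the following lines. This is a sketch under stated assumptions: the `Dataset` fields, the function name, the section headings and all sample values are invented for illustration, and the placeholder strings stand in for documentation and queries that are not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    """Hypothetical view of the metadata the ingestion service attaches."""
    name: str
    description: str
    log_format: str
    keys: list[str] = field(default_factory=list)


def build_context(system_prompt: str, datasets: list[Dataset],
                  query_docs: str, example_queries: list[str]) -> dict:
    """Return the system prompt plus one text block of supporting context."""
    dataset_lines = [
        f"- {d.name}: {d.description} (format: {d.log_format}; keys: {', '.join(d.keys)})"
        for d in datasets
    ]
    sections = [
        "## Datasets",
        "\n".join(dataset_lines),
        "## Query language",
        query_docs,
        "## Example queries",
        "\n".join(f"- {q}" for q in example_queries),
    ]
    # The system prompt stays separate so it can be sent as instructions,
    # while the context travels alongside the user's prompt.
    return {"system": system_prompt, "context": "\n\n".join(sections)}


request = build_context(
    system_prompt="You create dashboard widgets from plain-English requests.",
    datasets=[Dataset("app-logs", "application logs", "JSON", ["level", "duration_millis"])],
    query_docs="<query language documentation goes here>",
    example_queries=["<example query for an error count widget>"],
)
print(request["context"])
```

Separating the curated instructions from the per-organization context makes it easier to tweak the system prompt over time without touching how dataset metadata is gathered.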

What’s next

Our goal at the start of this project was to have a capable AI assistant integrated into the product which could provide real value to users without them going to much effort, or having all the context of their organization’s log usage.

The widget creator has laid the foundation for us to integrate other parts of the product with AI assistants that are not intrusive, reduce toil and provide real value with little effort. Moving forward we plan to leverage the work done here to bring AI to other parts of Bronto.

We have the parts in place to take a user prompt from the client, pass it to an LLM with a curated system prompt and other useful contextual information, and be able to stream updates back to the user in real time.
