Today we are introducing BrontoScope, which is one of the Bronto AI Labs initiatives to reduce user toil, increase team efficiency and reduce MTTR.

In recent years, there has been huge progress in the development and use of LLMs, thanks to breakthrough innovations in generative AI and an unprecedented amount of investment in hardware and research. The unique ability of LLMs to handle non-deterministic problems has opened the door to automating a huge number of tasks that would otherwise have required human effort.

Almost every software house is adding AI features to its products, often with mixed results. As a user, I’m often annoyed by the continuous stream of AI features popping up everywhere: messaging apps that want you to chat with an LLM while you are looking for your friends, social media apps that suggest adjustments to your photo or offer to write your post, and search engines that show an LLM answer as the first result, leaving you wondering whether what you are reading is true or a hallucination.

The observability space is no exception: many products are being “enriched” with AI-driven features but, in my opinion, most of these features are missing the point. Let me explain why.

Observability has always been hard, as a production system can easily produce TBs of logs and millions of traces and metrics every hour. This is too much data for any human to easily inspect. Observability products try to address the challenge of storing, searching and summarizing this huge volume of data and transforming it into something human readable. Dashboards, charts, queries and monitors have been the answer so far.

LLMs should be the next pillar in observability to reduce the burden on users, improve system reliability and reduce MTTR, but only if focused on making the user’s life simpler. Unfortunately, many of the features that have been introduced are missing the point and are in fact making the user’s life harder by:

- Requiring a detailed prompt as input. To receive well-structured and detailed responses, users must invest significant time and effort in crafting prompts.

- Producing long text responses. LLMs tend to produce verbose responses, especially in technical areas, when they do not know how much the reader already knows or when the prompt is not specific enough to elicit structured summaries that get to the point in a few lines. Even in the best-case scenario, where the AI has correctly understood the user’s request, the answer is often diluted across lines and lines of text.

- Taking a long time to provide the final answer. Complex workflows, often with several back and forth interactions with the LLM and the data, slow down the response, leaving the user waiting too long for answers.

The Bronto Approach

At Bronto, we are excited to extend the Bronto logging platform with LLM-based capabilities. The goal is to automate recurring work patterns, reduce manual operations and user toil, speed up setup and improve product usability, i.e. to make the user’s life simpler, not harder.

Our Bronto Labs initiative begins this process by expanding our logging platform with the set of tools below. We will collaborate with users to see what works best for them and to guide the development of new applications such as:

- Auto-Parsing logs using AI

- AI Dashboard Creation

- BrontoScope to investigate particular errors.

The philosophy behind this initiative is to maintain a user focus: before adding any new feature to the product, we want to make sure it is going to be useful for most users and will not slow down or hinder any of their tasks. This post covers BrontoScope; future posts will cover the “Auto-Parser” and “AI Dashboard Creator”.

BrontoScope

Incidents can happen outside working hours, when only one or a few on-call engineers are available. The experts in the area affected by the problem may be offline, at least during the initial phase of the investigation.

The first steps are usually:

- Understand the scope of the incident

- Estimate the impact for the customers and the rest of the system

- Assign a priority to the incident and decide how and when to tackle the issue

Staying calm, thinking clearly and acting quickly are all required, even when the alarm has woken the unlucky engineer(s) in the middle of the night. When an incident happens, every minute counts in mitigating or solving the issue and establishing the root causes, but too much haste and rushing to conclusions lead to incorrect handling of the issue.

LLMs can help a lot in such scenarios, as they can summarize relatively large amounts of data in seconds and are not affected by panic or by the confusion that comes from lack of sleep or an unexpected wake-up call at 3 AM.

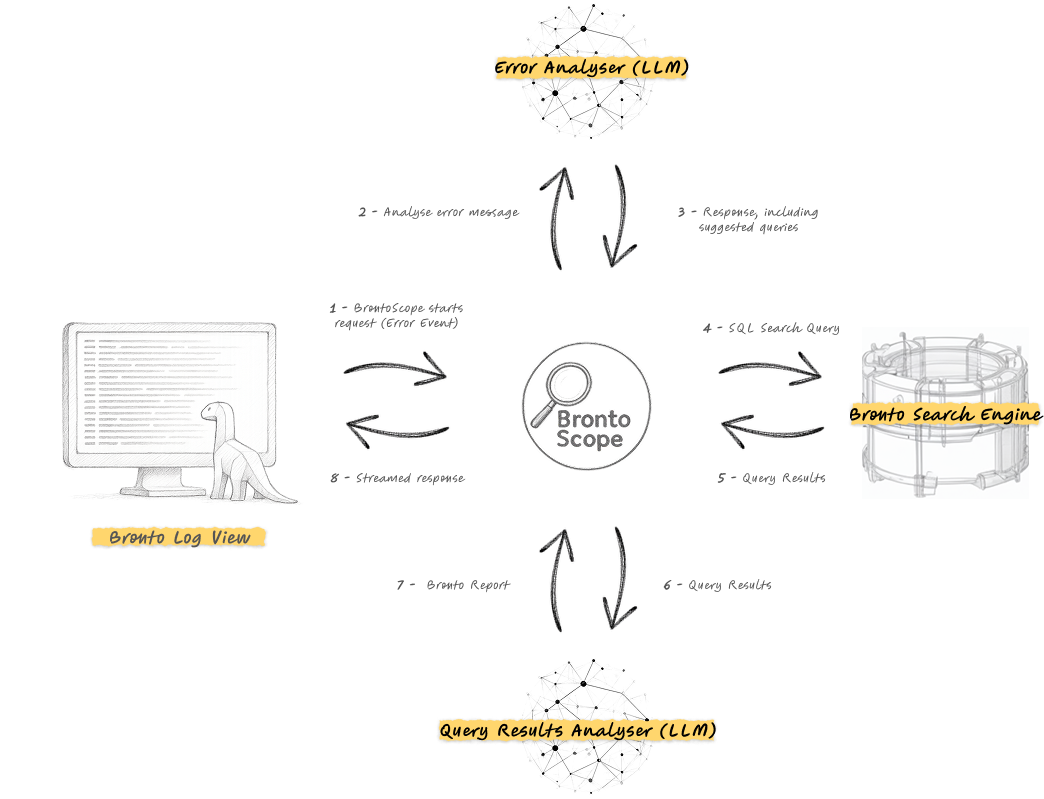

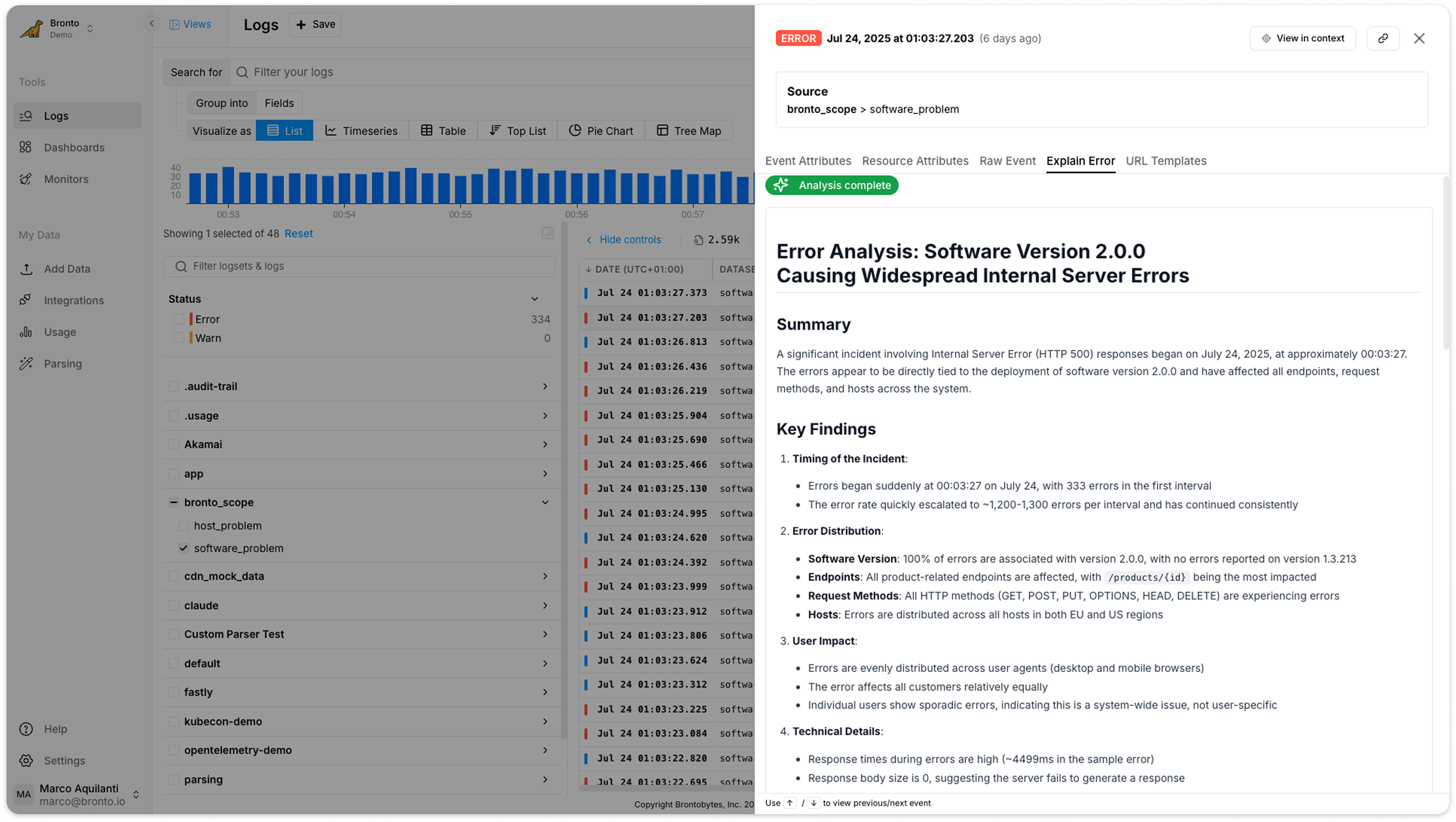

BrontoScope has been developed to use LLMs to speed up the incident investigation process. A single click on an error event in the logs is all it takes to start an automated investigation. The LLM writes and runs tens of queries against your data. The system analyzes the query results, generates a summary report, and delivers it to the user in just a few seconds.

The report includes:

- An estimate of the scope of the problem, including when the given errors started appearing in the logs and which users, customers, services, regions or hosts are affected

- The possible causes of the error, such as a lack of resources, network problems, software bugs, traffic spikes, etc.

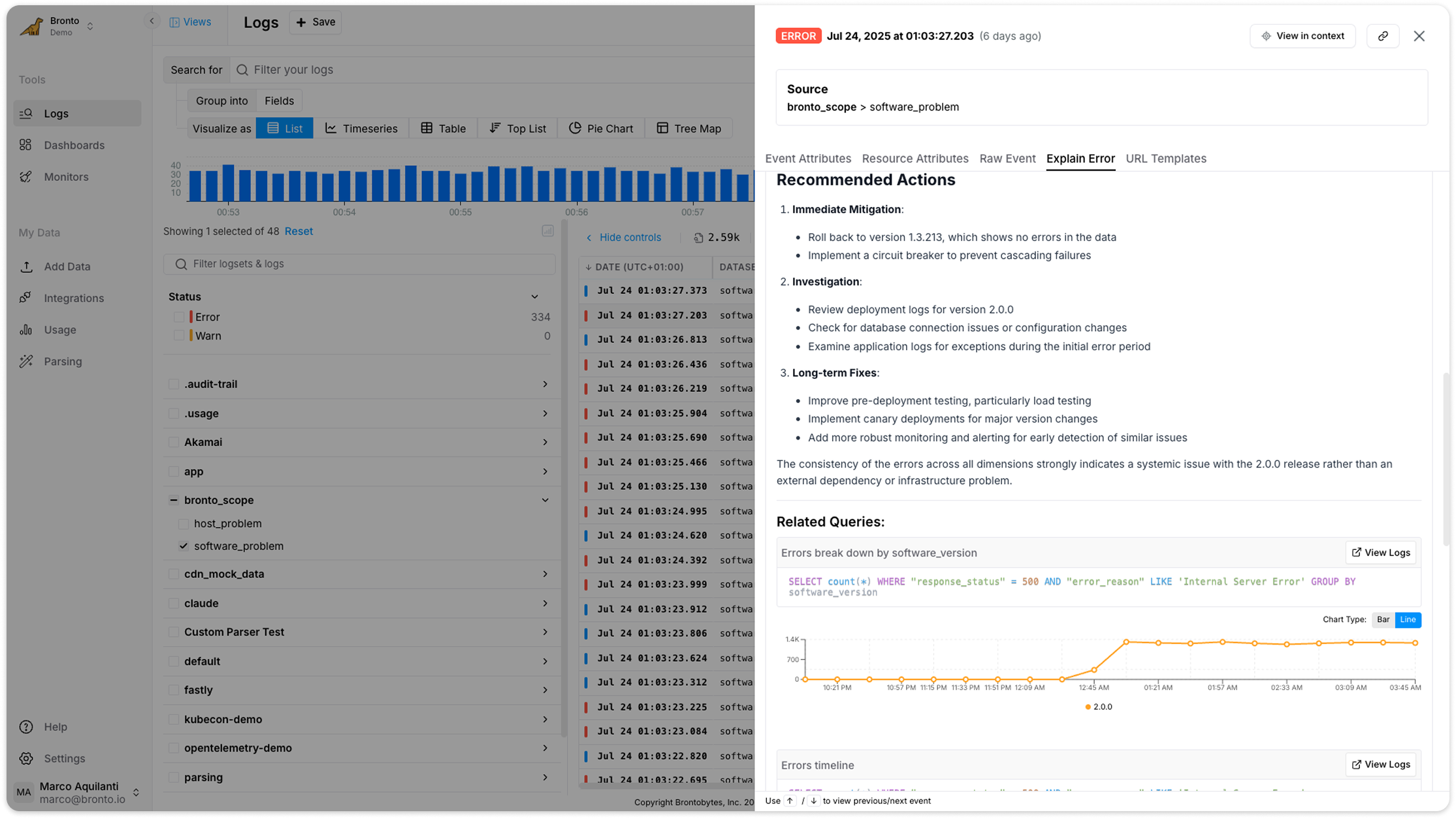

- Suggestions on how to stop the error occurring or how to continue the investigation

- The data that led the LLM to its conclusions and recommendations. In particular, the most significant query results and charts, chosen by the LLM but not manipulated by it, are included so that the user can validate the report’s conclusions and confirm that the model is not hallucinating.
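As a rough illustration of the first item in the report, the sketch below estimates scope from a handful of log events: when the error first appeared and which services, regions or customers are affected. The event fields, the sample data and the `estimate_scope` helper are assumptions made for this example. They are not Bronto’s schema or API, and in BrontoScope this analysis is driven by LLM-written queries against real data.

```python
from collections import Counter
from datetime import datetime

# Hypothetical log events; the field names are illustrative only.
events = [
    {"ts": "2024-05-01T03:01:50", "level": "INFO", "service": "checkout",
     "region": "eu-west-1", "customer": "acme"},
    {"ts": "2024-05-01T03:02:10", "level": "ERROR", "service": "checkout",
     "region": "eu-west-1", "customer": "acme"},
    {"ts": "2024-05-01T03:02:40", "level": "ERROR", "service": "checkout",
     "region": "eu-west-1", "customer": "globex"},
    {"ts": "2024-05-01T03:03:05", "level": "ERROR", "service": "checkout",
     "region": "us-east-1", "customer": "acme"},
]


def estimate_scope(events, dimensions=("service", "region", "customer")):
    """Return first-seen time, error count and per-dimension breakdown."""
    errors = [e for e in events if e["level"] == "ERROR"]
    if not errors:
        return None
    # Earliest timestamp among the error events.
    first_seen = min(datetime.fromisoformat(e["ts"]) for e in errors)
    # Count how many errors fall under each value of each dimension.
    affected = {
        dim: Counter(e[dim] for e in errors if dim in e) for dim in dimensions
    }
    return {"first_seen": first_seen, "error_count": len(errors),
            "affected": affected}


scope = estimate_scope(events)
print(scope["first_seen"], scope["error_count"], dict(scope["affected"]["region"]))
```

A breakdown like this makes it easy to see at a glance whether an error is confined to one service or region, which is the kind of evidence the report surfaces alongside its conclusions.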

BrontoScope was designed to deliver fast response times, particularly when compared to similar products, while processing large volumes of data from multiple angles. This low latency is achieved by minimizing the number of LLM requests, which reduces the number of handoffs between the agent and the data.

The process works in stages: first, the LLM analyzes the error and its surrounding context to guide subsequent data retrieval. The search engine then queries the relevant data and presents all findings to the LLM in a single, comprehensive prompt. Essentially, the LLM draws its conclusions from an ad-hoc dashboard, built around the error and composed of many charts. The final response is delivered to the users via Server-Sent Events, allowing them to read the output as it's being generated in real-time.
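The staged flow described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not BrontoScope’s actual implementation: `investigate`, `format_sse` and the three stub functions are hypothetical names, the stubs stand in for the LLM and the search engine, and the query descriptions are placeholders rather than real Bronto queries. The only part that follows a published format is the Server-Sent Events framing (`event:` and `data:` lines terminated by a blank line).

```python
import json
from typing import Callable, Iterable, Iterator


def format_sse(data: str, event: str = "message") -> str:
    """Frame one chunk of text as a Server-Sent Event."""
    # Each line of the payload gets its own "data:" field.
    lines = "".join(f"data: {line}\n" for line in data.split("\n"))
    return f"event: {event}\n{lines}\n"


def investigate(
    error_event: dict,
    plan_queries: Callable[[dict], list],
    run_query: Callable[[str], object],
    stream_report: Callable[[str], Iterable[str]],
) -> Iterator[str]:
    # Stage 1: one LLM request turns the error and its context into queries.
    queries = plan_queries(error_event)
    # Stage 2: the search engine runs every query; no LLM is involved here.
    results = {q: run_query(q) for q in queries}
    # Stage 3: a single prompt carries all findings to the LLM at once.
    prompt = json.dumps({"error": error_event, "results": results})
    # The report is forwarded chunk by chunk as it is generated.
    for chunk in stream_report(prompt):
        yield format_sse(chunk)
    yield format_sse("", event="done")


# Hypothetical stubs standing in for the LLM and the search engine.
def fake_plan(error_event: dict) -> list:
    return ["errors over time", "errors by service"]


def fake_search(query: str):
    canned = {"errors over time": [0, 0, 12],
              "errors by service": {"checkout": 12}}
    return canned[query]


def fake_report(prompt: str) -> Iterator[str]:
    yield "Errors started in the last interval. "
    yield "Only the checkout service is affected."


events_out = list(
    investigate({"message": "connection refused"},
                fake_plan, fake_search, fake_report)
)
for sse in events_out:
    print(sse, end="")
```

In this sketch the LLM is called only twice, once to plan and once to write the report, no matter how many queries run in between. That is the property the design relies on to keep latency low.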

BrontoScope is powered by AWS Bedrock's most advanced AI models, ensuring that all data is processed within the AWS ecosystem and that prompts and responses aren’t stored or shared with model providers or other third parties.

BrontoScope meets our goal of making the user’s life easier as:

- The user does not have to write a prompt to start an incident investigation. The user just selects a log event and the LLM does everything else: it analyses and understands the error, writes a filter to identify similar occurrences and scans the data autonomously, eliminating any effort from the user perspective.

- The LLM writes a concise report that goes straight to the point. Charts are included in the response to maximise the information delivered to the user.

- In most cases, the report is streamed to the user in less than 10 seconds. Tens of queries are usually run in each BrontoScope investigation, taking a few seconds in the worst case, thanks to the incredible speed of the Bronto search engine.
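To give a feel for what “a filter to identify similar occurrences” means, the sketch below builds one from a single log message by treating numbers and hex values as variable parts. This is a deterministic stand-in written for illustration only. In BrontoScope the filter is written by the LLM, and the `similar_pattern` helper, the sample messages and the choice of a regular expression are all assumptions of this example.

```python
import re

# Tokens assumed to vary between occurrences of the same error.
VARIABLE = r"(?:0x[0-9a-fA-F]+|\d+)"


def similar_pattern(message: str) -> re.Pattern:
    """Build a regex matching messages that differ only in numeric parts."""
    # Split the message around the variable tokens, escape the fixed text,
    # then rejoin with a sub-pattern that accepts any number or hex value.
    fixed_parts = re.split(VARIABLE, message)
    return re.compile(VARIABLE.join(re.escape(p) for p in fixed_parts))


selected = "Timeout after 3000ms connecting to db-12"
pattern = similar_pattern(selected)

candidates = [
    "Timeout after 5000ms connecting to db-7",
    "Connection refused by db-7",
]
similar = [m for m in candidates if pattern.fullmatch(m)]
print(similar)
```

Only the first candidate matches, because it shares the fixed text of the selected event and differs only in the numeric values.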

BrontoScope is currently available on request and is being used internally by the Bronto team, as well as by a number of our design partner customers in real-world situations. Improvements will be made in the coming months.

This is just one of the AI features being developed at Bronto. Stay tuned for future posts with exciting news, or join our AI initiative and help us make your life easier!

Want to try out Bronto for lightning-fast, always accessible logs with years of retention at a fraction of the cost of your current provider? Sign up here!
